DirectCore® Software

- Overview

- Functionality

- Multiuser Operation

- TI-RTOS and Bare Metal Support

- Debug Capabilities

- Unified Core List

- Symbol Lookup

- DirectCore Host and Guest Drivers

- DirectCore APIs

- Software Model

- Minimum Host Application Source Code Example

- Mailbox Create Examples

- CardParams Struct

- Installing / Configuring VMs

- Linux Support

- C667x Cards

- Legacy "Hardware Products" Page

- Legacy "Software Products" Page

Overview

DirectCore® software is a shared library module, part of the SigSRF software SDK. DirectCore is deployed by Tier 1 carriers and LEAs as part of SBC, media gateway, and Lawful Interception applications. SigSRF software, including DirectCore, is the basis of the DeepLI™ Lawful Interception solution, which is deployed by FBI level LEAs and thus indirectly used by carriers, ISPs, and IMs such as Skype, Google Voice, and WhatsApp. DirectCore currently supports Ubuntu, CentOS, and Red Hat Linux, providing key SigSRF functionality:

- System-wide platform management interface for x86 applications, including multiple VMs and multiple users

- Direct interface to multicore CPU PCIe cards for user-defined C/C++ programs. Functions include card control, memory transfers, multiple card support ("pool of cores"), core assignment in VMs, c66x core executable file download, multiuser support (using a VirtIO driver), and more

- Direct interface to legacy off-the-shelf DSP/acquisition hardware for user-defined C/C++ programs. Functions include data acquisition, waveform record/playback, signal synthesis, stimulus & response measurement, and DSP/math functions

DirectCore was formerly called "DirectDSP" and also supported Windows C/C++ applications, Visual Basic, and MATLAB®. If you need support for legacy applications, or for new Windows C/C++, VB, or MATLAB applications, please contact Signalogic.


Functionality

Functionality provided by DirectCore includes:

- For x86 platforms

  - System-wide platform management API, including multiple VMs and multiple users

  - Provide waveform file APIs for .wav, .au, compressed bitstream data, and other formats

- For coCPU™ platforms, additional functionality includes

  - Provide higher performance communication and data transfer to/from multicore CPU and DSP code than is possible with JTAG or JTAG-based solutions such as RTDX

  - (Legacy Only) Support standard, popular development environments such as Visual Studio, .NET, and MATLAB

  - Abstract coCPU hardware drivers to support a wide range of Linux distributions, bare-metal systems, and VMs. (For legacy hardware, this applies to Win2K, Linux, and Win9x, and to a wide range of boards including C5xxx, C6xxx, DSK, EVM, off-the-shelf vendor boards, etc.)

  - Provide "algorithm level" debug capability: allow developers to work with real-time data buffers and streams in an event-driven environment, using single-step, breakpoint, and register-level debug only when necessary

  - Enable host GUI development (i.e. connect GUI controls and displays to coCPU code variables and buffers) for production applications and systems

Multiuser Operation

As expected in high level Linux applications, DirectCore allows true multiuser operation, without time-slicing or batch jobs. Multiple host and VM instances can allocate and utilize c66x resources concurrently. How this works is described in sections below (see DirectCore Host and Guest Drivers and DirectCore APIs).

TI-RTOS and Bare Metal Support

DirectCore supports both TI-RTOS and bare metal applications.

Debug Capabilities

- Both local reset and hard reset methods are supported. Hard reset can be activated as a backup method in situations where a c66x device has network I/O in the "Tx descriptor stuck" state, or DDR3 memory tests are not passing

- Core dumps and exception handling statistics can be logged and displayed. For bare metal applications, exception handling is provided separately from standard TI-RTOS functionality

- Execution Trace Buffer (ETB) readout and display (note -- this capability is in work)

Unified Core List

DirectCore merges all c66x cards in the system, regardless of number of CPUs per card, and presents a unified list of cores. This is consistent with Linux multicore models and is required to support virtualization. Most DirectCore APIs accept a core list parameter (up to 64 cores), allowing API functionality to be applied to one or more cores as needed. Within one host or VM instance, for core lists longer than 64, multiple "logical card" handles can be opened using the DSAssignCard() API.

Symbol Lookup

DirectCore provides physical and logical addresses for c66x source code symbols (variables, structures, arrays, etc). A symbol lookup cache reduces overhead for commonly used symbols.

DirectCore Host and Guest Drivers

DirectCore drivers interact with c66x PCIe cards from either host or guest instances (VMs). Host instances use a "physical" driver and VMs use virtIO "front end" drivers. Drivers are usually loaded upon server boot, but can be loaded and unloaded manually. Some notable driver capabilities:

- c66x applications can share host memory with host applications, and also share between c66x cores

- multiple PCIe lanes are used concurrently when applicable

- both 32-bit and 64-bit BAR modes are supported

DirectCore APIs

DirectCore libraries provide a high level API for applications, and are used identically by both host and guest (VM) instances. Some notes about DirectCore APIs:

- DirectCore libraries abstract all c66x cores as a unified "pool" of cores, allowing multiple users / VM instances to share c66x resources, including NICs on the PCIe cards. This applies regardless of the number of PCIe cards installed in the server

- Most APIs have a core list argument, allowing the API to operate on one or more cores simultaneously. This applies to target memory reads and writes; in the case of reads a "read multiple" mode is supported that reads each core into an offset in the specified host memory

- APIs are fully concurrent between applications. The physical driver automatically maximizes PCIe bus bandwidth across multiple c66x CPUs

- APIs are mostly synchronous; reading/writing target memory supports an asynchronous mode where subsequent reads/writes to the same core(s) will block if prior reads/writes have not completed

- Mailbox APIs are supported, allowing asynchronous communication between host and target CPUs
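The "read multiple" mode mentioned above places data from each selected core at its own offset in one host buffer. The sketch below illustrates one way such offsets could be computed from a bitwise core list. It is not DirectCore code: the type `core_list_t`, the function names, and the packed layout (the k-th selected core lands at k times the per-core size) are assumptions made for illustration only.

```c
#include <stddef.h>
#include <stdint.h>

/* Stand-in for DirectCore's QWORD core list (assumption: 64-bit unsigned, one bit per core) */
typedef uint64_t core_list_t;

/* Number of cores selected in a core list (population count) */
unsigned core_count(core_list_t list) {
    unsigned n = 0;
    while (list) {
        list &= list - 1;   /* clear the lowest set bit */
        n++;
    }
    return n;
}

/* Hypothetical packed layout: data for the k-th selected core (counting up
   from the lowest set bit) starts at byte offset k * bytes_per_core.
   Returns (size_t)-1 if the core is not in the list. */
size_t read_multiple_offset(core_list_t list, unsigned core, size_t bytes_per_core) {
    if (core >= 64 || !(list & ((core_list_t)1 << core))) return (size_t)-1;
    core_list_t lower = list & (((core_list_t)1 << core) - 1);  /* selected cores below this one */
    return (size_t)core_count(lower) * bytes_per_core;
}

/* Host buffer size needed to hold one block per selected core */
size_t read_multiple_size(core_list_t list, size_t bytes_per_core) {
    return (size_t)core_count(list) * bytes_per_core;
}
```

With a core list of 0x15 (cores 0, 2, and 4) and 256 bytes per core, this layout needs a 768-byte host buffer, and data for core 4 starts at offset 512.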

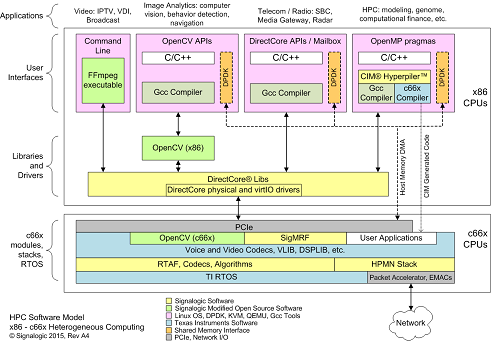

Software Model

The diagram above shows where DirectCore libs and drivers fit in the server HPC software architecture for c66x CPUs.

Some notes about the above diagram:

- Application complexity increases from left to right (command line, open source library APIs, user code APIs, heterogeneous programming)

- All application types can run concurrently in host or VM instances (see below for VM configuration)

- c66x CPUs can make direct DMA access to host memory, facilitating use of DPDK. The host memory DMA capability is also used to share data between c66x CPUs, for example in an application such as H.265 (HEVC) encoding, where 10s of cores must work concurrently on the same data set

- c66x CPUs are connected directly to the network. Received packets are filtered by UDP port and distributed to c66x cores at wire speed

Minimum Host Application Source Code Example

For non-transparent, or "hands on" mode, the source example below gives a minimum number of DirectCore APIs to make a working program (sometimes called the Hello World program). From a code flow perspective, the basic sequence is:

- open: assign card handles

- init

- reset

- load: download executable code

- run

- communicate: read/write memory, mailbox messages, etc.

- reset

- close: free card handles

In the source code example below, some things to look for:

- obtaining a card handle (hCard in the source code)

- DSLoadFileCores() API, which downloads executable files (produced by TI build tools) to one or more c66x cores. Different cores can run different executables

- cimRunHardware() API, which runs code on one or more c66x cores, including synchronization between host and target required to sync values of shared mem C code variables and buffers

- use of a "core list" parameter in most APIs. The core list can span multiple CPUs

/*

$Header: /root/Signalogic/DirectCore/apps/SigC641x_C667x/boardTest/cardTest.c

Purpose:

Minimum application example showing use of DirectCore APIs

Description:

host test program using DirectCore APIs and SigC66xx multicore CPU accelerator hardware

Copyright (C) Signalogic Inc. 2014-2019

Revision History

Created Nov 2014 AKM

Modified Jan 2016, JHB. Simplify for web page presentation (remove declarations, local functions, etc). Make easier to read

*/

#include <stdio.h>

#include <stdlib.h> /* exit(), EXIT_FAILURE */

#include <sys/socket.h>

#include <limits.h>

#include <unistd.h>

#include <sys/time.h>

/* Signalogic header files */

#include "hwlib.h" /* DirectCore API header file */

#include "cimlib.h" /* CIM lib API header file */

/* following header files required depending on application type */

#include "test_programs.h"

#include "keybd.h"

/* following shared host/target CPU header files required depending on app type */

#ifdef APP_SPECIFIC

#include "streamlib.h"

#include "video.h"

#include "ia.h"

#endif

/* Global vars */

QWORD nCoreList = 0; /* bitwise core list, usually given in command line */

bool fCoresLoaded = false;

/* Start of main() */

int main(int argc, char *argv[]) {

HCARD hCard = (HCARD)NULL; /* handle to card. Note that multiple card handles can be opened */

CARDPARAMS CardParams;

int nDisplayCore, timer_count;

DWORD data[120];

/* Display program header */

printf("DirectCore minimum API example for C66x host and VM accelerators, Rev 2.1, Copyright (C) Signalogic 2015-2016\n");

/* Process command line for basic target CPU items: card type, clock rate, executable file */

if (!cimGetCmdLine(argc, argv, NULL, CIM_GCL_DEBUGPRINT, &CardParams, NULL)) exit(EXIT_FAILURE);

/* Display card info */

printf("Accelerator card info: %s-%2.1fGHz, target executable file %s\n", CardParams.szCardDescription, CardParams.nClockRate/1e9, CardParams.szTargetExecutableFile);

/* Assign card handle, init cores, reset cores */

if (!(hCard = cimInitHardware(CIM_IH_DEBUGPRINT, &CardParams))) { /* use CIM_IH_DEBUGPRINT flag so cimInitHardware will print error messages, if any */

printf("cimInitHardware failed\n");

exit(EXIT_FAILURE);

}

nCoreList = CardParams.nCoreList;

/*

If application specific items are being used, process the command line again using flags and

structs as listed below (note -- this example gives NULL for the application specific struct)

App Flag Struct Argument (prefix with &)

--- ---- -------------------------------

VDI CIM_GCL_VDI VDIParams

Image Analytics CIM_GCL_IA IAParams

Media Transcoding CIM_GCL_MED MediaParams

Video CIM_GCL_VID VideoParams

FFT CIM_GCL_FFT FFTParams

*/

if (!cimGetCmdLine(argc, argv, NULL, CIM_GCL_DEBUGPRINT, &CardParams, NULL)) goto cleanup;

/* Load executable file(s) to target CPU(s) */

printf("Loading executable file %s to target CPU corelist 0x%llx\n", CardParams.szTargetExecutableFile, (unsigned long long)nCoreList);

if (!(fCoresLoaded = DSLoadFileCores(hCard, CardParams.szTargetExecutableFile, nCoreList))) {

printf("DSLoadFileCores failed\n");

goto cleanup;

}

/* Run target CPU hardware. If required, give application type flag and pointer to application property struct, as noted in comments above */

if (!cimRunHardware(hCard, CIM_RH_DEBUGPRINT | (CardParams.enableNetIO ? CIM_RH_ENABLENETIO : 0), &CardParams, NULL)) {

printf("cimRunHardware failed\n"); /* use CIM_RH_DEBUGPRINT flag so cimRunHardware will print any error messages */

goto cleanup;

}

nDisplayCore = GetDisplayCore(nCoreList);

DSSetCoreList(hCard, (QWORD)1 << nDisplayCore);

printf("Core list used for results display = 0x%llx\n", (unsigned long long)((QWORD)1 << nDisplayCore));

/* Start data acquisition and display using RTAF components */

setTimerInterval((time_t)0, (time_t)1000);

printf("Timer running...\n");

while (1) { /* we poll with IsTimerEventReady(), and use timer events to wake up and check target CPU buffer ready status */

ch = getkey(); /* look for interactive keyboard commands */

if (ch >= '0' && ch <= '9') {

nDisplayCore = ch - '0';

DSSetCoreList(hCard, (QWORD)1 << nDisplayCore);

}

else if (ch == 'Q' || ch == 'q' || ch == ESC) goto cleanup;

if (IsTimerEventReady()) {

/* check to see if next data buffer is available */

if ((new_targetbufnum[nDisplayCore] = DSGetProperty(hCard, DSPROP_BUFNUM)) != targetbufnum[nDisplayCore]) {

targetbufnum[nDisplayCore] = new_targetbufnum[nDisplayCore]; /* update local copy of target buffer number */

printf("Got data for core %d... count[%d] = %d\n", nDisplayCore, nDisplayCore, count[nDisplayCore]++);

if (dwCode_count_addr != 0) {

DSReadMem(hCard, DS_RM_LINEAR_PROGRAM | DS_RM_MASTERMODE, dwCode_count_addr, DS_GM_SIZE32, &timer_count, 1);

printf("Timer count value = %d\n", timer_count);

}

/* read data from target CPUs, display */

if (DSReadMem(hCard, DS_RM_LINEAR_DATA | DS_RM_MASTERMODE, dwBufferBaseAddr + nBufLen * 4 * hostbufnum[nDisplayCore], DS_GM_SIZE32, (DWORD*)&data, sizeof(data)/sizeof(DWORD))) {

hostbufnum[nDisplayCore] ^= 1; /* toggle buffer number, let host know */

DSSetProperty(hCard, DSPROP_HOSTBUFNUM, hostbufnum[nDisplayCore]);

for (int i=0; i<120; i+=12) {

for (int j=0; j<12; j++) printf("0x%08x ", data[i+j]); printf("\n");

}

}

}

}

}

cleanup:

if (fCoresLoaded) SaveC66xLog(hCard);

printf("Program and hardware cleanup, hCard = %d\n", hCard);

/* Hardware cleanup */

if (hCard) cimCloseHardware(hCard, CIM_CH_DEBUGPRINT, nCoreList, NULL);

}

/* Local functions */

int GetDisplayCore(QWORD nCoreList) {

int nDisplayCore = 0;

do {

if (nCoreList & 1) break;

nDisplayCore++;

} while (nCoreList >>= 1);

return nDisplayCore;

}
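The polling loop in the example above reads from one of two target buffers, computing the read address as dwBufferBaseAddr + nBufLen * 4 * hostbufnum and toggling hostbufnum after each successful read. The sketch below isolates that bookkeeping as plain C so the arithmetic can be checked without hardware. The struct and function names are hypothetical, and 32-bit target addresses are an assumption.

```c
#include <stdint.h>

/* Hypothetical host-side view of a two-buffer (ping-pong) exchange */
typedef struct {
    uint32_t base_addr;       /* target address of buffer 0 (dwBufferBaseAddr in the example) */
    uint32_t buf_len;         /* buffer length in 32-bit words (nBufLen in the example) */
    int host_bufnum;          /* buffer the host reads next, 0 or 1 */
    int last_target_bufnum;   /* last target buffer number seen by the host */
} pingpong_t;

/* Target address of the buffer the host should read next;
   the factor of 4 converts 32-bit words to bytes */
uint32_t pingpong_read_addr(const pingpong_t *pp) {
    return pp->base_addr + pp->buf_len * 4u * (uint32_t)pp->host_bufnum;
}

/* Returns 1 if the target reported a new buffer number, and records it */
int pingpong_data_ready(pingpong_t *pp, int target_bufnum) {
    if (target_bufnum == pp->last_target_bufnum) return 0;
    pp->last_target_bufnum = target_bufnum;
    return 1;
}

/* After a successful read, switch to the other buffer */
void pingpong_toggle(pingpong_t *pp) {
    pp->host_bufnum ^= 1;
}
```

For a base address of 0x80000000 and a buffer length of 120 words, the two read addresses are 0x80000000 and 0x800001E0 (480 bytes apart).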

Mailbox Create Examples

Send and receive mailbox creation API examples are shown below. Send means host CPU cores are sending mail to target CPU cores, and receive means host CPU cores are receiving messages from target CPU cores.

/* Allocate send mailbox handle (send = transmit, or tx) */

if (tx_mailbox_handle[node] == NULL) {

tx_mailbox_handle[node] = malloc(sizeof(mailBoxInst_t));

if (tx_mailbox_handle[node] == NULL) {

printf("Failed to allocate Tx mailbox memory for node = %d\n", node);

return -1;

}

}

/* Create send mailbox */

mailBox_config.mem_start_addr = host2dspmailbox + (nCore * TRANS_PER_MAILBOX_MEM_SIZE);

mailBox_config.mem_size = TRANS_PER_MAILBOX_MEM_SIZE;

mailBox_config.max_payload_size = TRANS_MAILBOX_MAX_PAYLOAD_SIZE;

if (DSMailboxCreate(hCard, tx_mailbox_handle[node], MAILBOX_MEMORY_LOCATION_REMOTE, MAILBOX_DIRECTION_SEND, &mailBox_config, (QWORD)1 << nCore) != 0) {

printf("Tx DSMailboxCreate() failed for node: %d\n", node);

return -1;

}

/* Allocate receive mailbox handle (receive = rx) */

if (rx_mailbox_handle[node] == NULL) {

rx_mailbox_handle[node] = malloc(sizeof(mailBoxInst_t));

if (rx_mailbox_handle[node] == NULL) {

printf("Failed to allocate Rx mailbox memory for node = %d\n", node);

return -1;

}

}

/* Create receive mailbox */

mailBox_config.mem_start_addr = dsp2hostmailbox + (nCore * TRANS_PER_MAILBOX_MEM_SIZE);

if (DSMailboxCreate(hCard, rx_mailbox_handle[node], MAILBOX_MEMORY_LOCATION_REMOTE, MAILBOX_DIRECTION_RECEIVE, &mailBox_config, (QWORD)1 << nCore) != 0) {

printf("Rx DSMailboxCreate() failed for node: %d\n", node);

return -1;

}
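In the create examples above, each core's mailbox starts at a base address plus nCore times a fixed per-mailbox size. The sketch below reproduces that address arithmetic and adds a simple check that the send and receive areas do not overlap. The region size and the 32-bit address type are placeholders chosen for illustration; the actual value of TRANS_PER_MAILBOX_MEM_SIZE comes from DirectCore header files and is not given here.

```c
#include <stdint.h>

/* Placeholder value; stands in for TRANS_PER_MAILBOX_MEM_SIZE (assumption) */
#define MAILBOX_REGION_SIZE 0x1000u

/* Start address of the mailbox region for a given core:
   base + core * region size, as in the create examples above */
uint32_t mailbox_start_addr(uint32_t base, unsigned core) {
    return base + core * MAILBOX_REGION_SIZE;
}

/* Returns 1 if the send area (starting at tx_base) and the receive area
   (starting at rx_base), each holding num_cores regions, do not overlap */
int mailbox_areas_disjoint(uint32_t tx_base, uint32_t rx_base, unsigned num_cores) {
    uint64_t area = (uint64_t)num_cores * MAILBOX_REGION_SIZE;
    uint64_t tx_end = (uint64_t)tx_base + area;   /* one past the last send byte */
    uint64_t rx_end = (uint64_t)rx_base + area;   /* one past the last receive byte */
    return tx_end <= rx_base || rx_end <= tx_base;
}
```

A check like this can be run once at startup, before any mailbox is created, to catch base addresses that were placed too close together for the number of cores in use.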

Mailbox Query and Read Examples

Source code excerpts with mailbox query and read API examples are shown below. Some code has been removed for clarity. These examples process "session" actions, as used for example in a media transcoding application. Other applications would use streams (video), nodes (analytics), etc.

/* query and read mailboxes on all active cores */

nCore = 0;

do {

if (nCoreList & ((QWORD)1 << nCore)) {

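ret_val = DSQueryMailbox(hCard, (QWORD)1 << nCore);

if (ret_val < 0) {

fprintf(mailbox_out, "mailBox_query error: %d\n", ret_val);

nCore++; /* advance to the next core here, because continue skips the increment at the end of the loop */

continue;

}

num_msgs = ret_val; /* pending message count, kept separate because DSReadMailbox() overwrites ret_val. Declare num_msgs as int with the other locals */

while (num_msgs-- > 0) {

ret_val = DSReadMailbox(hCard, rx_buffer, &size, &trans_id, (QWORD)1 << nCore);

if (ret_val < 0) {

fprintf(mailbox_out, "mailBox_read error: %d\n", ret_val);

continue;

}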

memcpy(&header_in, rx_buffer, sizeof(struct cmd_hdr));

if (header_in.type == DS_CMD_CREATE_SESSION_ACK) {

:

:

}

else if (header_in.type == DS_CMD_DELETE_SESSION_ACK) {

:

:

}

else if (header_in.type == DS_CMD_EVENT_INDICATION) {

:

:

}

}

}

nCore++;

} while (nCore < 64 && (nCoreList >> nCore) != 0); /* stop after the highest core in the list */
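The loop structure above (visit each core in the list, query it, then read as many messages as the query reported) can be exercised without hardware by substituting stub callbacks. The sketch below is a minimal version under that assumption. The callback types and the demo stubs are hypothetical stand-ins and do not reflect the signatures of the DirectCore mailbox APIs.

```c
#include <stdint.h>

typedef uint64_t core_list_t;   /* stand-in for QWORD (assumption) */

/* Hypothetical callbacks standing in for the query and read APIs */
typedef int (*query_fn)(unsigned core);   /* pending message count, or < 0 on error */
typedef int (*read_fn)(unsigned core);    /* >= 0 on success, < 0 on error */

/* Visit every core in the list once. For each core, read as many messages
   as the query reported. Returns the number of messages read successfully. */
int drain_mailboxes(core_list_t list, query_fn query, read_fn read_one) {
    int total = 0;
    for (unsigned core = 0; core < 64 && (list >> core) != 0; core++) {
        if (!(list & ((core_list_t)1 << core))) continue;   /* core not selected */
        int pending = query(core);
        if (pending < 0) continue;   /* query error: skip this core, keep going */
        while (pending-- > 0) {
            if (read_one(core) >= 0) total++;
        }
    }
    return total;
}

/* Demo stubs: core N reports N pending messages, except core 3, which fails */
int demo_query(unsigned core) { return core == 3 ? -1 : (int)core; }
int demo_read(unsigned core)  { (void)core; return 0; }
```

Using a for loop keeps the core increment in one place, so an error on one core cannot skip the increment, and the pending count lives in its own variable so a read result cannot overwrite it.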

CardParams Struct

The CardParams struct shown in the above "minimum" source code example is given here.

typedef struct {

/* from command line */

char szCardDesignator[CMDOPT_MAX_INPUT_LEN];

char szTargetExecutableFile[CMDOPT_MAX_INPUT_LEN];

unsigned int nClockRate;

QWORD nCoreList;

/* derived from command line entries */

char szCardDescription[CMDOPT_MAX_INPUT_LEN];

unsigned int maxCoresPerCPU;

unsigned int maxCPUsPerCard;

unsigned int maxActiveCoresPerCard;

unsigned int numActiveCPUs; /* total number of currently active CPUs (note: not max CPUs, but CPUs currently in use) */

unsigned int numActiveCores; /* total number of currently active cores (note: not max cores, but cores currently in use) */

bool enableNetIO; /* set if command line params indicate that network I/O is needed. Various application-specific params are checked */

WORD wCardClass;

unsigned int uTestMode;

bool enableTalker; /* not used for c66x hardware */

CIMINFO cimInfo[MAXCPUSPERCARD];

} CARDPARAMS; /* common target CPU and card params */

typedef CARDPARAMS* PCARDPARAMS;
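Several CardParams fields are described as derived from command line entries, including numActiveCPUs and numActiveCores. The sketch below shows one way such counts could be derived from a bitwise core list and a cores-per-CPU value. It assumes, hypothetically, that the cores of each CPU occupy consecutive bit positions in the core list; the function names are not DirectCore APIs.

```c
#include <stdint.h>

typedef uint64_t core_list_t;   /* stand-in for QWORD (assumption) */

/* Number of cores selected in the list */
unsigned active_cores(core_list_t list) {
    unsigned n = 0;
    while (list) {
        list &= list - 1;   /* clear the lowest set bit */
        n++;
    }
    return n;
}

/* Number of CPUs with at least one selected core, assuming each CPU owns
   cores_per_cpu consecutive bits of the list (hypothetical numbering) */
unsigned active_cpus(core_list_t list, unsigned cores_per_cpu) {
    if (cores_per_cpu == 0) return 0;                  /* invalid input */
    if (cores_per_cpu >= 64) return list != 0 ? 1 : 0; /* one CPU spans the whole list */
    core_list_t cpu_mask = ((core_list_t)1 << cores_per_cpu) - 1;
    unsigned n = 0;
    while (list) {
        if (list & cpu_mask) n++;   /* this CPU has at least one active core */
        list >>= cores_per_cpu;     /* move on to the next CPU's bits */
    }
    return n;
}
```

For example, with 8 cores per CPU (as on the C6678), a core list of 0x0103 selects three cores spread over two CPUs.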

Installing / Configuring VMs

Below is a screen capture showing VM configuration for c66x accelerator cards, using the Ubuntu Virtual Machine Manager (VMM) user interface:

c66x core allocation is transparent to the number of PCIe cards installed in the system; just like installing memory DIMMs of different sizes, c66x cards can be mixed and matched.
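To illustrate the mix-and-match idea, the sketch below maps a position in a unified core list back to a card and a core on that card, given cards with different core counts. The struct, the function name, and the card sizes are illustrative assumptions and do not describe how DirectCore implements this internally.

```c
#include <stddef.h>

/* Location of a core: which card, and which core on that card */
typedef struct {
    int card;              /* card index, or -1 if the unified index is out of range */
    unsigned local_core;   /* core index within the card */
} core_location_t;

/* cores_per_card[i] holds the number of cores on card i. Cards are assumed
   (hypothetically) to be concatenated in order to form the unified list. */
core_location_t locate_core(const unsigned *cores_per_card, size_t num_cards,
                            unsigned unified_core) {
    core_location_t loc = { -1, 0 };
    for (size_t i = 0; i < num_cards; i++) {
        if (unified_core < cores_per_card[i]) {
            loc.card = (int)i;
            loc.local_core = unified_core;
            return loc;
        }
        unified_core -= cores_per_card[i];   /* skip past this card's cores */
    }
    return loc;   /* index beyond the last card */
}
```

With three cards of 32, 64, and 32 cores, unified core 40 maps to core 8 on the second card, and any index of 128 or more is reported as out of range.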